Lisp Has Average Performance in Google AI Competition

December 09, 2010

A post on the r-chart blog analyzed the Google AI Competition rankings. Given Paul Graham’s strong advocacy for Lisp, the obvious question was whether Lisp programs actually outperformed programs written in other languages.

The naive question, “Is Lisp better?”, can’t be answered from competition rankings because of selection bias. Average programmers don’t enter AI competitions, so the entrants are not a representative sample of the people who use each language. A narrower question can be answered: among competition entrants, how did the Elo ratings of Lisp and Java programs compare?

I scraped the rankings using Enlive (data here) and found that the average Elo across all programs was about 2030, while Java programs averaged close to 2060. That 30-point gap is well within one standard deviation, so Java programs are pretty average.
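The comparison above boils down to a per-language mean and a check against the spread of all ratings. The following is a minimal Python sketch, assuming the rankings have already been scraped into `(language, Elo)` pairs. The original scraping used Enlive; this sketch does not reproduce that step. The `RATINGS` values are made-up placeholders, not the competition data, and `language_mean` and `within_one_sd` are hypothetical helper names.

```python
from statistics import mean, stdev

# HYPOTHETICAL (language, Elo) pairs standing in for the scraped rankings.
# These are illustrative placeholders, NOT the real competition numbers.
RATINGS = [
    ("Java", 2110), ("Java", 1990), ("Java", 2080),
    ("Lisp", 2150), ("Lisp", 1850), ("Lisp", 2120),
    ("C++", 2010), ("C++", 2000), ("Python", 1960),
]

def language_mean(ratings, language):
    """Mean Elo of every entry written in `language`."""
    return mean(elo for lang, elo in ratings if lang == language)

def within_one_sd(ratings, language):
    """True if the language's mean Elo lies within one sample standard
    deviation of the mean Elo over all entries."""
    all_elos = [elo for _, elo in ratings]
    gap = abs(language_mean(ratings, language) - mean(all_elos))
    return gap <= stdev(all_elos)

if __name__ == "__main__":
    overall = mean(elo for _, elo in RATINGS)
    for lang in ("Java", "Lisp"):
        print(lang, language_mean(RATINGS, lang),
              "overall:", overall,
              "within 1 SD:", within_one_sd(RATINGS, lang))
```

The sample standard deviation (`stdev`) is used here because the entrants are treated as a sample. With real data, a group's mean falling inside that band means the group is unremarkable relative to the whole field.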

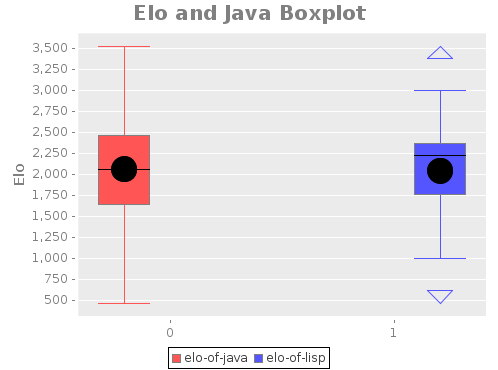

The average Lisp program’s Elo was 2040: slightly above the overall average, but lower than Java’s. That is not what Paul Graham’s essays would predict. Averages hide structure, though, and a box plot tells a more interesting story.

The competition winner was a Lisp program, but it was an outlier. That undercuts the claims, made after the announcement, that Lisp deserved the credit for the win. The median Lisp program ranked above average, but the worst Lisp entries were bad enough to drag the mean below Java’s. The density plot in the original r-chart analysis supports this: Lisp had a wider spread, with more extreme tails.
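The pattern described above, a higher median but a lower mean, is what a long lower tail produces. The following is a minimal Python sketch using made-up ratings (NOT the real data). It computes the statistics a box plot exposes, alongside the mean and spread. `ELOS` and `summarize` are hypothetical names chosen for illustration.

```python
from statistics import mean, median, stdev

# HYPOTHETICAL Elo ratings per language, illustrative only and NOT the
# scraped competition data. Lisp has one weak entry far below the rest.
ELOS = {
    "Java": [1990, 2080, 2110],
    "Lisp": [1850, 2120, 2150],
}

def summarize(elos):
    """Box-plot statistics (min, median, max) plus mean and sample stdev."""
    return {
        "min": min(elos),
        "median": median(elos),
        "max": max(elos),
        "mean": mean(elos),
        "stdev": stdev(elos),
    }

if __name__ == "__main__":
    for language, elos in ELOS.items():
        s = summarize(elos)
        # A mean well below the median signals a long lower tail.
        print(f"{language}: median={s['median']} mean={s['mean']:.0f} "
              f"stdev={s['stdev']:.0f}")
```

In this toy data, Lisp's median beats Java's, yet a single low entry pulls Lisp's mean below Java's and roughly triples its standard deviation. This is why a box plot or density plot reveals more than a bar chart of means.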

The competition didn’t show Lisp to be special. It showed that language choice matters less than programmer skill, and that outliers drive narratives more than distributions do.